Mathematics is the foundation of countless sciences, allowing us to model things like planetary orbits, atomic motion, signal frequencies, protein folding, and more. It’s also a valuable testbed for problem-solving ability, because solvers must analyze a challenge, pick out suitable methods, and chain them together to produce an answer.

Prior research has demonstrated the usefulness of AI that has a firm grasp of mathematical concepts. For example, OpenAI recently introduced GPT-f, an automated prover and proof assistant for the Metamath formalization language. GPT-f found new short proofs that have been accepted into the main Metamath library, the first time a machine learning-based system contributed proofs that were adopted by a formal mathematics community. For its part, Facebook also claims to have experimented successfully with math-solving AI algorithms. In a blog post last January, researchers at the company said they’d taught a model to view complex mathematical equations “as a kind of language and then [treat] solutions as a translation problem.”

“While most other text-based tasks are already nearly solved by enormous language models, math is notably different. We showed that accuracy is slowly increasing and, if trends continue, the community will need to discover conceptual and algorithmic breakthroughs to attain strong performance on math,” the coauthors wrote. “Given the broad reach and applicability of mathematics, solving math datasets with machine learning would be of profound practical and intellectual significance.”

To measure the problem-solving ability of large and general-purpose language models, the researchers created a dataset called MATH, which consists of 12,500 problems taken from high school math competitions. Given a problem from MATH, language models must generate a sequence that reveals the final answer.
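To make the scoring idea concrete, here is a minimal sketch of checking whether a generated sequence reveals the right final answer. It assumes the final answer is wrapped in a LaTeX `\boxed{...}` marker. That delimiter is an assumption for illustration, since the article does not say how the answer is marked, and the helper names `extract_boxed` and `is_correct` are hypothetical.

```python
from typing import Optional

BOXED = r"\boxed{"  # assumed answer delimiter, not stated in the article


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last boxed expression in text, or None."""
    start = text.rfind(BOXED)
    if start == -1:
        return None
    depth = 1  # we are inside the opening brace of the boxed expression
    chars = []
    for ch in text[start + len(BOXED):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
    return None  # braces never balanced


def is_correct(generated: str, reference: str) -> bool:
    """Exact-match comparison of the final answers, ignoring outer whitespace."""
    pred = extract_boxed(generated)
    gold = extract_boxed(reference)
    return pred is not None and gold is not None and pred.strip() == gold.strip()
```

Exact string matching is deliberately strict: equivalent answers written differently (for example `0.5` and `\frac{1}{2}`) would count as wrong unless answers are normalized first.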

Problems in MATH are labeled by difficulty from 1 to 5 and span seven subjects, including geometry, algebra, calculus, statistics, linear algebra, and number theory. They also come with step-by-step solutions so that language models can learn to answer questions they haven’t seen before.

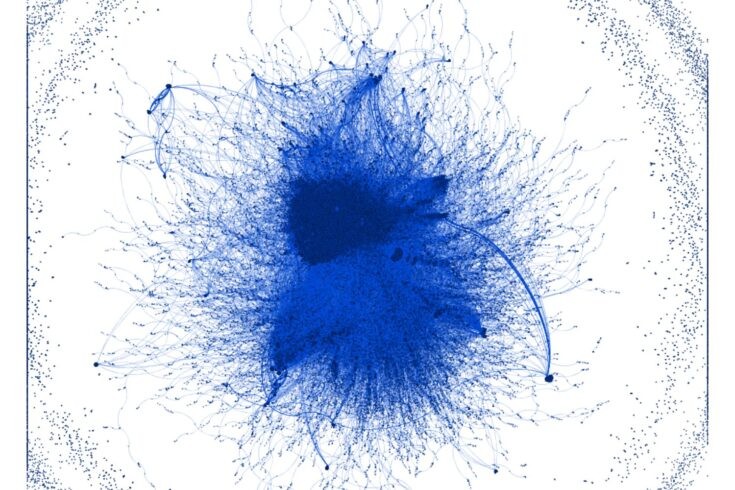

Training models on the fundamentals of mathematics required the researchers to create a separate dataset with hundreds of thousands of solutions to common math problems. This second dataset, the Auxiliary Mathematics Problems and Solutions (AMPS), comprises more than 100,000 problems from Khan Academy with solutions and over 5 million problems generated using Mathematica scripts based on 100 hand-designed modules. In total, AMPS contains 23GB of content.

As the researchers explain, the step-by-step solutions in the datasets allow the language models to use a “scratch space” much like a human mathematician might. Rather than having to arrive at the correct answer right away, models can first “show their work” in partial solutions that step toward the right answer.
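The difference between answering directly and using a scratch space can be sketched as two ways of formatting the same problem as text. This is an illustration only: the `Problem` fields, the prompt wording, and both function names are hypothetical and are not taken from the researchers' code.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    question: str
    solution: str  # step-by-step working
    answer: str    # final answer only


def answer_only_example(p: Problem) -> str:
    """Format in which the model must jump straight to the answer."""
    return f"Problem: {p.question}\nFinal answer: {p.answer}"


def scratch_space_example(p: Problem) -> str:
    """Format in which the working appears before the final answer."""
    return (
        f"Problem: {p.question}\n"
        f"Solution: {p.solution}\n"
        f"Final answer: {p.answer}"
    )


if __name__ == "__main__":
    p = Problem("What is 2 + 3?", "2 + 3 = 5.", "5")
    print(scratch_space_example(p))
```

In the second format the partial steps sit between the question and the answer, which is where a model would "show its work."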

Even with the solutions, the coauthors found that accuracy remained low for the large language models they benchmarked: GPT-3 and GPT-2, GPT-3’s predecessor. Having the models generate their own solutions before producing an answer actually degraded accuracy because, while many of the steps were related to the question, they were illogical. Moreover, simply increasing the amount of training time and the number of parameters in the models, which sometimes improves performance, proved to be impractically costly. (In machine learning, parameters are the internal values a model learns from its training data.)

That said, the researchers showed that step-by-step solutions still improve performance when used for training. In particular, providing models with solutions at training time increased accuracy, and pretraining on AMPS boosted accuracy by around 25% in relative terms, equivalent to a 15-times increase in model size.

“Despite these low accuracies, models clearly possess some mathematical knowledge: they achieve up to 15% accuracy on the easiest difficulty level, and they are able to generate step-by-step solutions that are coherent and on-topic even when incorrect,” the coauthors wrote. “Having models train on solutions increases relative accuracy by 10% compared to training on the questions and answers directly.”
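Because the quoted gain is relative, it helps to see how little it moves the absolute number when the baseline is low. The sketch below uses a hypothetical 5% baseline accuracy chosen purely for illustration; it is not a figure from the article.

```python
def apply_relative_gain(baseline: float, relative_gain: float) -> float:
    """Accuracy after a relative improvement, e.g. 0.10 for +10%."""
    return baseline * (1.0 + relative_gain)


def relative_gain(baseline: float, improved: float) -> float:
    """Relative improvement of `improved` over a non-zero `baseline`."""
    if baseline == 0:
        raise ValueError("baseline accuracy must be non-zero")
    return (improved - baseline) / baseline


if __name__ == "__main__":
    baseline = 0.05  # hypothetical baseline accuracy, for illustration only
    improved = apply_relative_gain(baseline, 0.10)
    print(f"baseline {baseline:.1%} -> improved {improved:.2%}")
    print(f"absolute change: {improved - baseline:.2%} points")
```

Under that assumed baseline, a 10% relative gain is only half a percentage point in absolute terms, which is consistent with the coauthors describing the accuracies as low.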

The researchers have released MATH and AMPS as open source, hoping that they will spur further research in this direction alongside existing mathematics datasets such as DeepMind’s.

Xsolla, a global video game commerce company, announced Xsolla Drops, a new tool to augment and scale influencer and affiliate programs.

The tool expands the existing Xsolla Partner Network and gives game developers and publishers an efficient way to create and scale performance-based influencer and affiliate programs, the company said. Chris Hewish, president of Xsolla, made the announcement in a fireside chat on Monday at our GamesBeat Summit 2023 event, where Xsolla is a major sponsor.

Xsolla Drops provides an added layer of marketing support for game developers to increase user acquisition and incremental sales by streamlining the ability to promote their digital items, including virtual currencies, skins, NFTs, game keys, premium subscriptions, and more.

“Drops gives game developers and publishers the ability to build and reward their gaming audience easily,” said Alexander Menshikov, business head at Xsolla, in a statement. “We are helping game developers reward their fans, explore new user acquisition methods, and strengthen long-term engagement with a game’s current player base by offering exclusive in-game items and unique experiences. With Drops, developers will create game-specific campaigns with targeted audiences, delivering a personalized experience on a custom landing page with no code required.”

As user acquisition costs rise, developer budgets are taking a hit as developers try to work out which marketing channels are the most effective, Xsolla said. Drops takes a multi-tiered campaign approach to this issue by bringing the thrill of game discovery back to the players, creating inherent value for creators, and raising a game’s overall brand awareness.

This comprehensive, new marketing tool solves the challenge of the rising costs of acquiring, engaging, and retaining players with branded websites and close collaboration with influencers, artists, esports pro gamers, celebrities, and renowned agencies.

“We were thrilled with the results of our Drops campaign with Xsolla,” said Scott Robinson, owner of SprintGP, in a statement. “The onboarding process was easy and only took a few hours from start to finish. All we needed to do was to fill out the form, upload game design assets, and provide redemption instructions for players to get a reward. Xsolla handled the rest, including web page development and marketing setup. We doubled our user base in less than 24 hours and saw a 300% increase in website traffic. We highly recommend Xsolla’s Drops tool to any game developer looking for new ways to drive user acquisition and engagement.”
